Development Update: InsightAI Agent Proof-of-Concept

In an era where AI systems make increasingly consequential decisions, the "black box" problem remains a critical barrier to trust and adoption. Today, we're excited to unveil our proof-of-concept for a revolutionary approach to AI explainability that doesn't compromise on capability.

The Problem with Black Box AI

Traditional machine learning models, especially deep neural networks, operate as opaque systems: inputs go in and outputs come out, but the decision-making process remains hidden. This lack of transparency creates significant challenges:

Regulatory compliance issues in high-stakes industries

Difficulty identifying and correcting biases

Limited trust from end-users and stakeholders

Inability to verify model reasoning or audit decisions

Our Novel Architecture: The Best of Both Worlds

At Transparent AI, we've developed a groundbreaking approach that combines the accessibility of conversational large language models (LLMs) with the transparency of deterministic, explainable machine learning systems.

Our patent-pending architecture creates a powerful synergy:

Conversational Interface: A large language model serves as the front-end, making complex data analysis accessible through natural conversation

Explainable AutoML Core: Our proprietary insightAI platform powers the backend, automatically building interpretable models

Complete Auditability: Every step in the process is designed to be logged in a blockchain ledger, creating an immutable, cryptographically verified record
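
To make the auditability idea concrete, here is a minimal sketch of a hash-chained, append-only log in Python. It is not our ledger implementation: the `AuditLog` class, the step names, and the `iris.csv` filename are hypothetical, and a single-process hash chain is only tamper-evident. A real blockchain ledger would add replication and consensus on top of this linking scheme.

```python
import hashlib
import json


def entry_hash(index, step, payload, prev_hash):
    """Hash an entry's contents together with the previous entry's hash."""
    body = json.dumps(
        {"index": index, "step": step, "payload": payload, "prev_hash": prev_hash},
        sort_keys=True,
    )
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


class AuditLog:
    """Append-only, hash-chained log (hypothetical stand-in for the ledger)."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self):
        self.entries = []

    def append(self, step, payload):
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        index = len(self.entries)
        entry = {
            "index": index,
            "step": step,
            "payload": payload,
            "prev_hash": prev_hash,
            "hash": entry_hash(index, step, payload, prev_hash),
        }
        self.entries.append(entry)
        return entry

    def verify(self):
        """Recompute every hash and check each link to the previous entry."""
        prev_hash = self.GENESIS
        for index, entry in enumerate(self.entries):
            expected = entry_hash(index, entry["step"], entry["payload"], prev_hash)
            if (
                entry["index"] != index
                or entry["prev_hash"] != prev_hash
                or entry["hash"] != expected
            ):
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.append("data_loaded", {"file": "iris.csv", "rows": 150})  # assumed filename
log.append("model_built", {"model_type": "rule_based"})
log.append("prediction", {"label": "setosa"})
print(log.verify())  # True: the chain is intact

# Editing any earlier entry changes its hash and breaks the chain.
log.entries[1]["payload"]["model_type"] = "neural_net"
print(log.verify())  # False: tampering is detected
```

Because each entry's hash covers the previous entry's hash, changing one record invalidates that record and breaks the link to every record after it, which is what lets an auditor detect after-the-fact edits.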

How It Works: A Revolution in AI Interaction

The system creates a seamless workflow that transforms how users interact with AI:

Problem Exploration: Users converse with the LLM about their company, operations, research questions, or strategic goals

Process Optimization: The LLM helps identify potential process improvements and optimization opportunities

Data Preparation: Working collaboratively with users, the LLM assists in identifying and preparing relevant data

Automated Model Building: Our insightAI platform automatically detects the problem type (classification, regression, etc.) and builds optimized, explainable models

Interpretation & Insights: The LLM interprets model outputs, explaining correlations, potential biases, and decision factors in plain language

Domain Expertise: Specialized LLMs can be deployed for specific contexts (healthcare, finance, etc.) to provide deeper domain knowledge
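
As one illustration of the automated model building step, problem-type detection could be approximated by inspecting the target column. The sketch below is a simple heuristic written for this post, not the platform's actual detection logic; the function name `detect_problem_type` and the `max_classes` cutoff are assumptions.

```python
def detect_problem_type(target_values, max_classes=20):
    """Guess 'classification' or 'regression' from the target column.

    Heuristic (an assumption, not the platform's actual logic):
    - any non-numeric value            -> classification
    - few distinct integer-like values -> classification
    - anything else                    -> regression
    """
    values = [v for v in target_values if v is not None and v != ""]
    if not values:
        raise ValueError("target column is empty")

    numeric = []
    for v in values:
        try:
            numeric.append(float(v))
        except (TypeError, ValueError):
            # A label such as "setosa" cannot be parsed as a number.
            return "classification"

    distinct = set(numeric)
    all_integer_like = all(x.is_integer() for x in distinct)
    if all_integer_like and len(distinct) <= max_classes:
        return "classification"
    return "regression"


print(detect_problem_type(["setosa", "versicolor", "virginica"]))  # classification
print(detect_problem_type([0, 1, 1, 0]))                           # classification
print(detect_problem_type([5.1, 4.9, 6.3]))                        # regression
```

A heuristic like this can misfire, for example on integer-valued counts that are really a regression target, which is one reason the conversational step lets the user confirm or correct the detected problem type.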

Proof-of-Concept: Transparent Decision-Making in Action

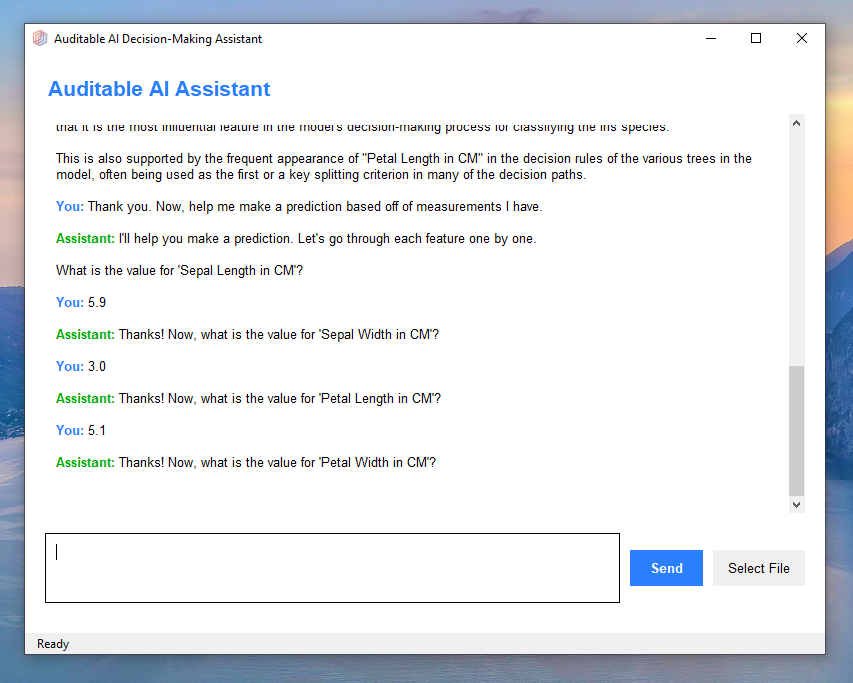

We've developed a working proof-of-concept using Python and the classic Iris dataset to demonstrate the power of our approach. The workflow showcases how our system delivers truly explainable AI:

The conversational agent begins by asking how it can help

When given a problem focus, it requests relevant data (in this case, accessing a pre-prepared CSV file)

Our automated machine learning system builds a rule-based model and captures key metrics: rules, statistics, feature importance, and performance

Users can freely question the agent about the data or model characteristics

For classification tasks, the agent helps collect the necessary input data

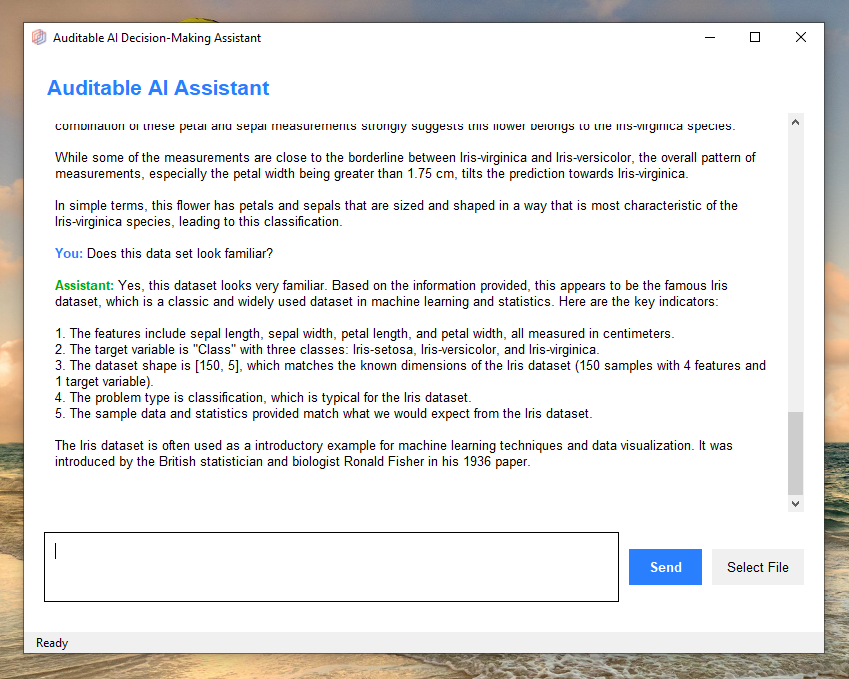

The deterministic ML model makes the classification decision

The LLM interprets and explains the specific rules that led to that classification

Throughout the process, the LLM demonstrates contextual understanding, recognizing the Iris dataset and its significance

What makes this revolutionary is that the LLM isn't making the classification decisions - it's interpreting the reasoning behind decisions made by a fully transparent, rule-based system. This is categorically different from approaches that simply have black-box systems attempt to explain themselves after the fact.
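
The separation described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the proof-of-concept code: the two rules and their thresholds are hand-picked for readability rather than learned by our platform, the feature names are hypothetical, and the `explain` function is a fixed template standing in for the LLM.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    feature: str
    threshold: float
    label_if_at_most: str  # label assigned when the value is <= threshold


# Illustrative rules only (assumed thresholds, not the model's learned rules).
RULES = [
    Rule("petal_length_cm", 2.45, "setosa"),
    Rule("petal_width_cm", 1.75, "versicolor"),
]
DEFAULT_LABEL = "virginica"


def classify(sample):
    """Deterministic step: apply rules in order and record every test made."""
    trace = []
    for rule in RULES:
        value = sample[rule.feature]
        passed = value <= rule.threshold
        trace.append((rule, value, passed))
        if passed:
            return rule.label_if_at_most, trace
    return DEFAULT_LABEL, trace


def explain(label, trace):
    """Interpretation step: a template standing in for the LLM's explanation."""
    parts = []
    for rule, value, passed in trace:
        relation = "is at most" if passed else "is greater than"
        parts.append(f"{rule.feature} ({value}) {relation} {rule.threshold}")
    return f"Classified as {label} because " + " and ".join(parts) + "."


sample = {"petal_length_cm": 5.1, "petal_width_cm": 1.8}
label, trace = classify(sample)
print(explain(label, trace))
```

The explanation is built only from the trace returned by `classify`, so it cannot cite a reason the rule-based model did not actually use. That is the property the architecture relies on when an LLM takes the place of the template.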

The Road Ahead: Building Toward Trusted AI

Our proof-of-concept demonstrates the potential of our approach, but we're just getting started. Our development roadmap includes:

Building out the complete AutoML platform with support for a wider range of problem types

Implementing the blockchain-based ledger system for comprehensive auditability

Developing more robust LLM integration for both pre-model building (problem definition) and post-model building (interpretation)

Creating specialized LLMs for key industries with unique regulatory and domain requirements

Beyond Explainability: A Pathway to AGI

We believe this system represents more than just a solution to the explainability problem - it may offer a pathway toward artificial general intelligence.

By building an agent that can identify problems, source relevant data, build predictive models, and interpret results, we're creating a generalized problem-solving system. The ability to use diverse tools is often cited as an indicator of intelligence, and our recursive approach - building, interpreting, and extracting insights from predictive models - represents a form of extended reasoning.

Most importantly, by ensuring every step remains transparent and auditable, we're creating AI systems that can be truly trusted with increasingly complex and consequential decisions.

Stay tuned for more updates as we continue to develop this revolutionary approach to explainable, auditable, and trustworthy AI.